Multilingual AI makes knowledge accessible and actionable across linguistic divides.

READ MORE: Multilingual Document Classification for AI

Revolutionizing Global Customer Support: The Power of Multilingual Retrieval Augmented Generation (M-RAG)

READ MORE: M-RAG: Retrieval Augmented Generation Goes Global

Learn how to copy and mirror the configuration of one repository to another.

READ MORE: How to Save and Transfer Repository Configuration

Achieve an automated, custom export of all or parts of a repository via a simple four-step process.

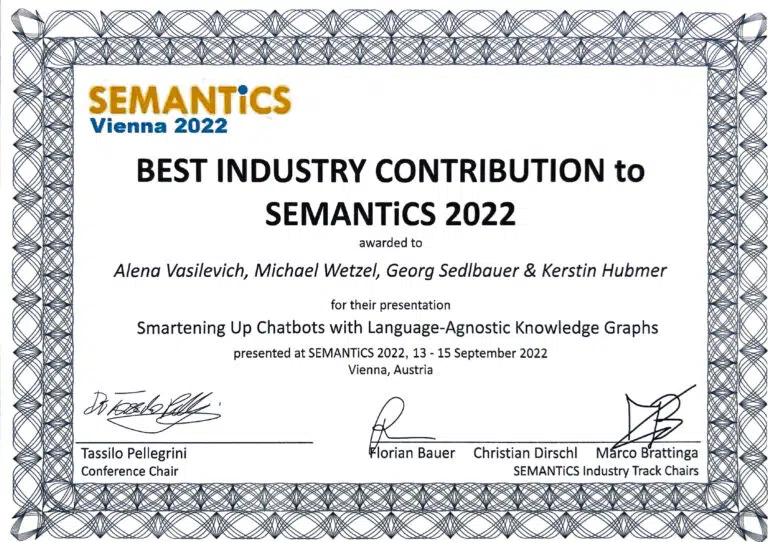

READ MORE: Automate Exports Of Custom Data Into Another Solution

Coreon and Vienna Business Agency received a Best Industry Contribution Award at the Semantics 2022 conference for their work on how multilingual knowledge graphs improve chatbots.

READ MORE: Semantics 2022: Coreon & Partners Win Best Industry Contribution Award